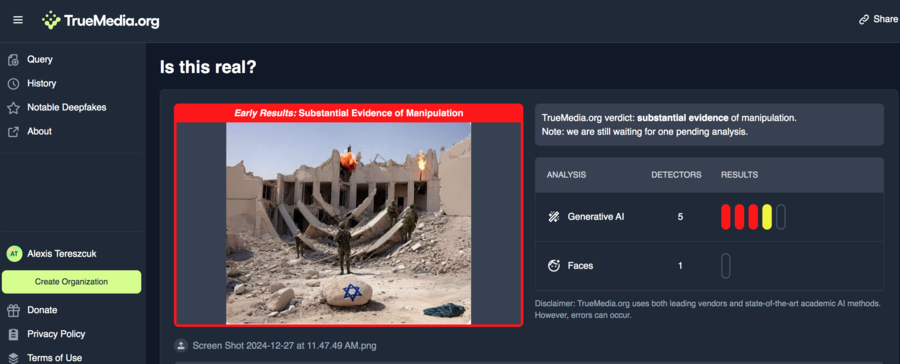

Is a photo authentic that shows Israeli soldiers standing in front of ruins in Gaza that form the shape of a Hanukkah menorah? No, that's not true: This image is fake. The image's creator told Lead Stories that he made it "using AI and some photoshop edits afterwards."

The claim appeared in a post (archived here) published on X on December 25, 2024. A caption read:

What an incredible shot. Talk about turning darkness into light!

This is what the post looked like on X at the time of writing:

(Source: X screenshot taken on Fri Dec 27 19:25:52 2024 UTC)

The image shows four soldiers standing in front of a destroyed building with ruins that form the shape of a menorah. The Star of David is painted on a foreground boulder, implying that the ruins are in Gaza amid the Hamas-Israel war that began in October 2023.

Lead Stories searched (archived here) for the image through Google Lens and found a December 2023 article posted on the website Lets AI (archived here) that credited the image to an artist named Yuval Luzon.

In an Instagram message received on December 27, 2024, Luzon (archived here) told Lead Stories that he fabricated the image and sent it to his family's WhatsApp group on December 7, 2023, the first day of Hanukkah that year. He described how he made the image, writing:

I created this photo using AI and some photoshop edits afterwards. It isn't real and I must admit I was surprised myself [at] the amount of people [who] believed it was real.

Luzon said the image is "definitely not real, and there are some AI quirks that can be detected quite easily," which he chose not to correct:

I saw these things before I sent it a year ago, and I could have fixed it if I wanted, but I'm really against making fake news and I wanted to keep it obvious that it's not real [at] second look but keep the first impression as kind of real.

He pointed to the soldier on the left, who is headless, as this zoomed-in screenshot from the image shows:

(Source: X screenshot taken on Fri Dec 27 23:11:22 2024 UTC)

Luzon explained to Lead Stories why he created the image:

It was exactly two months after October 7th, and I guess people needed something that made them feel like we are winning again, after that awful time of despair and sadness.

He wrote that he didn't want to create something that people would take as the truth:

I don't think that the worst thing that can happen with AI is that people will start believing photos of events that never happened. I think that the worst thing that can happen with AI is that people will stop believing photos of events that did happen.

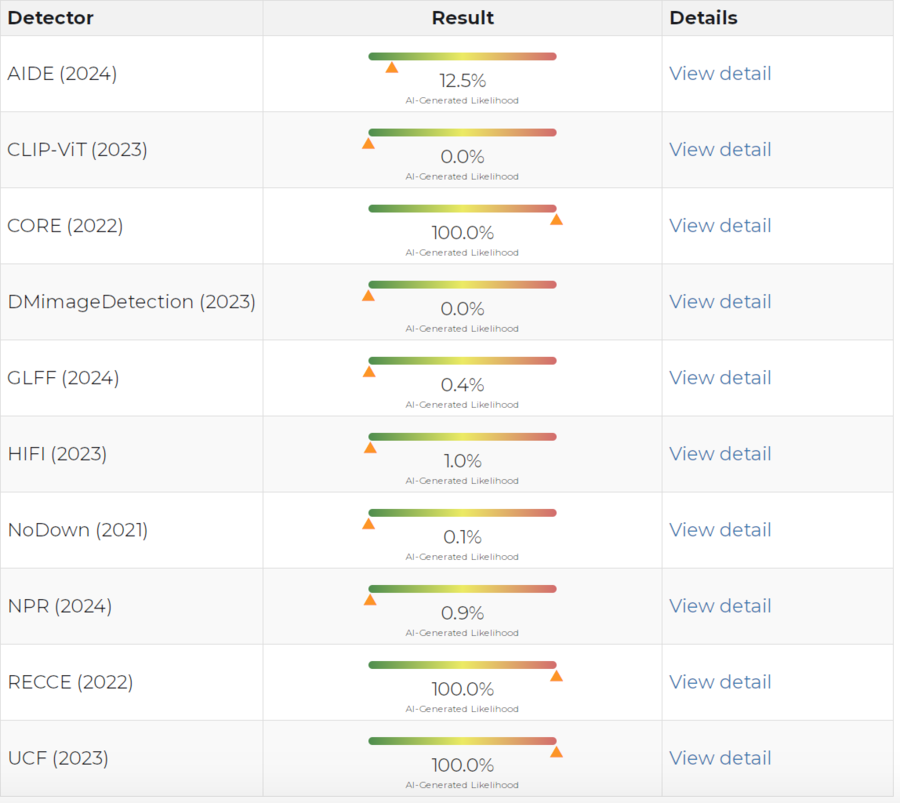

The AI detection tool TrueMedia (archived here) found "substantial evidence of manipulation," as shown in a screenshot of the results below:

(Source: TrueMedia website screenshot taken on Fri Dec 27 19:27:11 2024 UTC)

DeepFake-O-Meter, another AI detection tool, determined that the image had a 100 percent likelihood of being generated using AI, as this screenshot of its report shows:

(Source: DeepFake-O-Meter website screenshot taken on Fri Dec 27 19:29:23 2024 UTC)

Read more

Other Lead Stories fact checks about the Hamas-Israel war can be found here.