Does an image of a shirtless boxer in black briefs show new evidence that the Olympic women's boxing champion from Algeria, Imane Khelif, is really a man? No, that's not true: This photo is fake and was posted on X by an account that publishes only AI-generated images. It has several telltale signs of AI such as strange, glyph-like text on signs and deformed hands of people in the background.

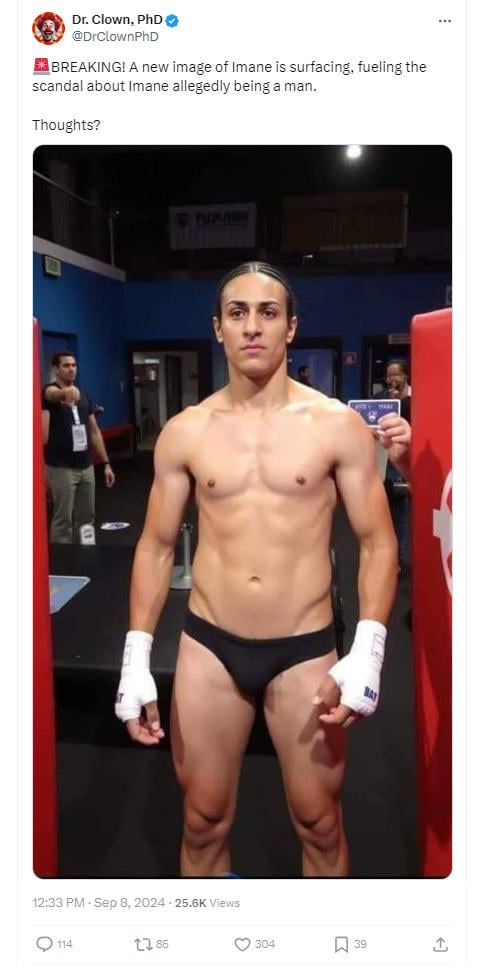

The photo surfaced in a post (archived here) on X on September 8, 2024. The post was captioned:

🚨BREAKING! A new image of Imane is surfacing, fueling the scandal about Imane allegedly being a man.

Thoughts?

This is how the post appeared at the time of writing:

(Source: X screenshot taken on Mon Sep 9 23:16:33 2024 UTC)

The scope of this fact check is the authenticity of this image. Additional Lead Stories fact checks on false claims about Imane Khelif's gender can be found here.

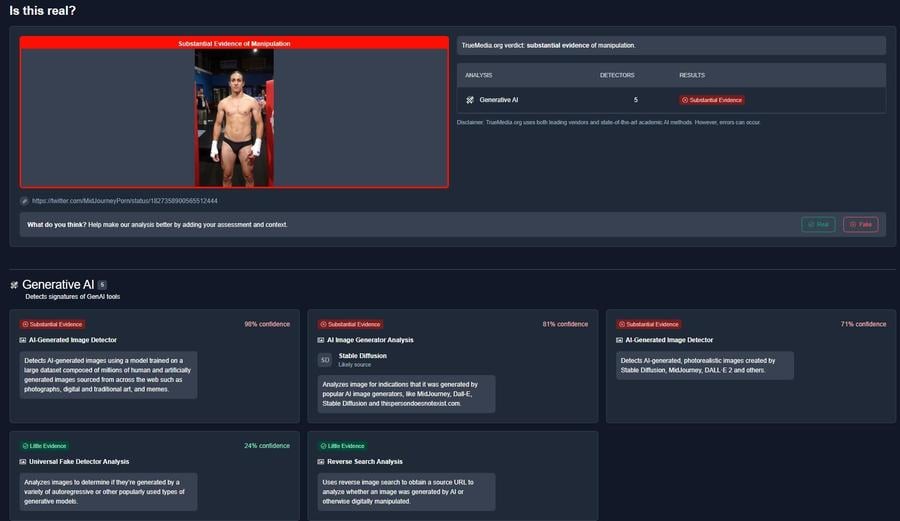

Lead Stories tested the image (pictured above) with the AI detection tools at TrueMedia.org (see full report here). The analysis of the low-quality image posted by @DrClownPhD did not yield conclusive results. Lead Stories asked the team at TrueMedia to give this query a human review, and they uncovered a much earlier version of this image that had been posted on X on August 24, 2024.

The original inconclusive analysis of the @DrClownPhD image was revised with the findings from the human analyst. The earlier image uncovered by the TrueMedia analyst had a much higher resolution and was not as blurry. Lead Stories made a second query to TrueMedia.org with the better-quality image, and this time the tools (see full report here) found "Substantial Evidence of Manipulation" in just a few minutes. The detection tools used by TrueMedia.org have a variety of specialties. One of the five detection tools, the AI-Generated Image Detector, returned a 98 percent confidence score that the image was AI-generated.

(Source: Screenshot of the second reading from TrueMedia.org taken on Mon Sep 09 23:54:47 2024 UTC)

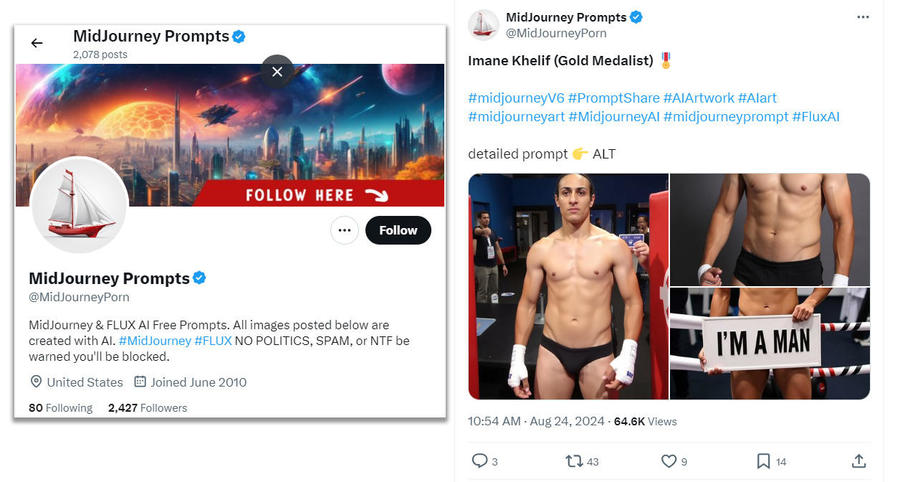

The earliest copy of the AI-generated image found so far is included in a collection of three images (below right) posted on August 24, 2024, on X (archived here) by MidJourney Prompts @MidJourneyPorn (pictured below left). The post was captioned:

#midjourneyV6 #PromptShare #AIArtwork #AIart #midjourneyart #MidjourneyAI #midjourneyprompt #FluxAI

detailed prompt 👉 ALT

The MidJourney Prompts account describes itself this way in its bio:

MidJourney & FLUX AI Free Prompts. All images posted below are created with AI. #MidJourney #FLUX NO POLITICS, SPAM, or NTF be warned you'll be blocked.

(Source: Lead Stories composite image with X screenshots taken on Mon Sep 09 23:16:33 2024 UTC)

A September 10, 2024, article published in Kellogg Insight at Northwestern University, "5 Telltale Signs That a Photo Is AI-generated," summarizes an extensive June 2024 report by the same authors (54-page .PDF here). The analysis is broken down into five categories:

We organized this 2024 guide across 5 high level categories in which artifacts and implausibilities emerge in AI-generated images: Anatomical Implausibilities, Stylistic Artifacts, Functional Implausibilities, Violations of Physics, and Sociocultural Implausibilities.

Section 1.1 on page 16 of the .PDF discusses problems with hands. Section 3.4, on page 35, addresses the recent advances made in AI rendering of words:

AI-generated text can appear glyph-like, but be in a nonexistent language or produce nonexistent words, spelling errors, and incomprehensible sentences.

The Lead Stories composite image below shows some details in the image posted by @MidJourneyPorn, focusing on a deformed hand holding a card in a functionally implausible way, and on glyph-like text on the card and on the exit sign above the door in the background.

(Source: Lead Stories composite image with X screenshot taken on Mon Sep 09 23:16:33 2024 UTC)

Other Lead Stories fact checks focusing on the Summer Olympics can be found here.