So you've probably read all those warnings from pundits, academics and researchers about how generative AI tools like ChatGPT are going to affect disinformation and fact checking. And you may even have seen some recent examples, such as a deep-faked pope in a puffer jacket or Donald Trump being arrested. But let me tell you the story of a real-life example that is probably even stranger than anything those pundits, academics and researchers could have imagined.

Perugia inspiration

First, some context: In April 2023 several members of the Lead Stories team (including myself) attended the International Journalism Festival in Perugia, Italy. It is one of the largest annual gatherings of journalists, where thousands of participants come together to listen to dozens of speakers, panels and workshops about all things journalism-related. Unsurprisingly, AI was a recurring topic this year, as was (traditionally) disinformation.

The consensus among the speakers was that while generative AI is fantastic at producing well-written, grammatical text, it is generally not a good idea to let it write news articles meant for public consumption. AI language models tend to hallucinate facts and references that don't exist in the real world, which is the opposite of what quality journalism is all about.

But AI language models are really good at summarizing or transforming text, and that is where many of the speakers we heard in Perugia saw opportunities for journalism: figuring out the gist of large stacks of documents, summarizing articles into tweets or headlines, that sort of work. That gave me the inspiration to try something.

Trendolizer & ChatGPT

If you've been following Lead Stories for the last few years, you know we've been using our patented Trendolizer technology to track which stories, videos, etc. are currently going viral online. One way we use it is to keep an eye on the most viral headlines from a list of websites that we have fact checked several times in the past, so we can spot new viral stories that may be worth fact checking.

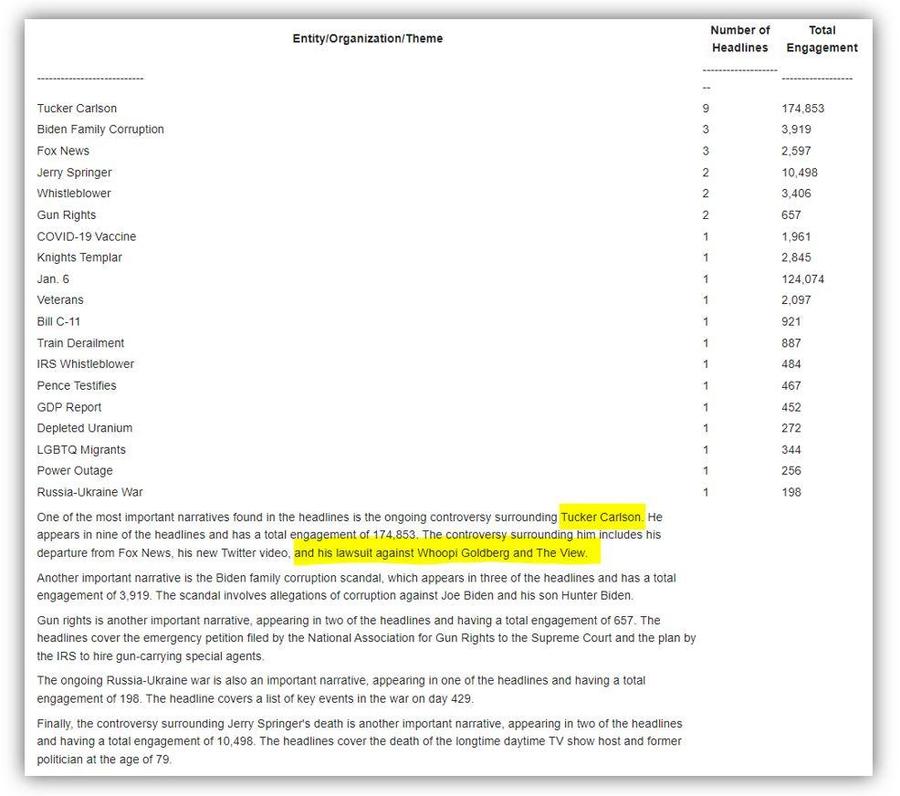

Inspired by the talks in Perugia, I created an experimental script that would pull the 100 most recent headlines from those sites and feed them to ChatGPT with a prompt asking it to extract the names, organizations, groups, people, etc. that appeared most often and to write a few paragraphs about the main themes or narratives found in the headlines. I set up the script to run every six hours and automatically email ChatGPT's replies to my inbox. The idea was to see if it could help us find new stories to fact check, and indeed the initial results seemed promising.
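For readers who want to try something similar, the pipeline described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the actual Lead Stories script: fetching headlines from Trendolizer is left out (we assume a list of headline strings is already available, newest first), the prompt wording is a hypothetical paraphrase, and `ask_model` is a hypothetical stand-in for whatever ChatGPT client you use.

```python
from email.message import EmailMessage
from typing import Callable, Sequence

# Hypothetical prompt wording; it only paraphrases the instructions described above.
PROMPT_TEMPLATE = (
    "Below are {count} recent headlines, one per line.\n"
    "1. List the names, organizations, groups and people that appear most often.\n"
    "2. Write a few paragraphs about the main themes or narratives in these headlines.\n\n"
    "{headlines}"
)
MAX_HEADLINES = 100  # the script described above used the 100 most recent headlines


def build_prompt(headlines: Sequence[str], limit: int = MAX_HEADLINES) -> str:
    """Join up to `limit` non-blank headlines (assumed newest first) into one prompt."""
    recent = [h.strip() for h in headlines if h.strip()][:limit]
    return PROMPT_TEMPLATE.format(count=len(recent), headlines="\n".join(recent))


def build_digest_email(summary: str, sender: str, recipient: str) -> EmailMessage:
    """Wrap the model's reply in an email message that smtplib can send."""
    msg = EmailMessage()
    msg["Subject"] = "Headline themes digest"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(summary)
    return msg


def run_once(
    headlines: Sequence[str],
    ask_model: Callable[[str], str],
    sender: str,
    recipient: str,
) -> EmailMessage:
    """One pass of the pipeline: headlines -> prompt -> model reply -> email.

    `ask_model` is a hypothetical stand-in for your chat-model client:
    it receives the prompt text and returns the reply text.
    """
    prompt = build_prompt(headlines)
    summary = ask_model(prompt)
    return build_digest_email(summary, sender, recipient)
```

To actually deliver the digest, the returned message could be passed to `smtplib.SMTP(host).send_message(msg)`, and a cron entry such as `0 */6 * * *` would rerun the script every six hours. Passing the model call in as a plain function keeps the sketch independent of any particular client library and makes it easy to test with a stub.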

On April 28, 2023, for example, it sent me a message that looked like this (highlights mine):

This was just four days after the announcement that Tucker Carlson and Fox News had parted ways, a story that had caused big waves online and elsewhere. But a lawsuit against "The View" and host Whoopi Goldberg? That was new.

Remember: This information was pulled from a list of headlines from sites that had published false information in the past. So it wouldn't be surprising if it was false.

Tracking down the story

My initial suspicion was that it must have come from one of the sites run by Christopher Blair, a well-known liberal satirist who has been trolling conservatives and Republicans for years with made-up, over-the-top headlines that do really well on social media. The actual articles always contain a multitude of hints that they are fake, and the sites he publishes them on often carry satire disclaimers. Lead Stories has debunked scores of his stories over the years, including several about Whoopi Goldberg and supposed lawsuits against "The View."

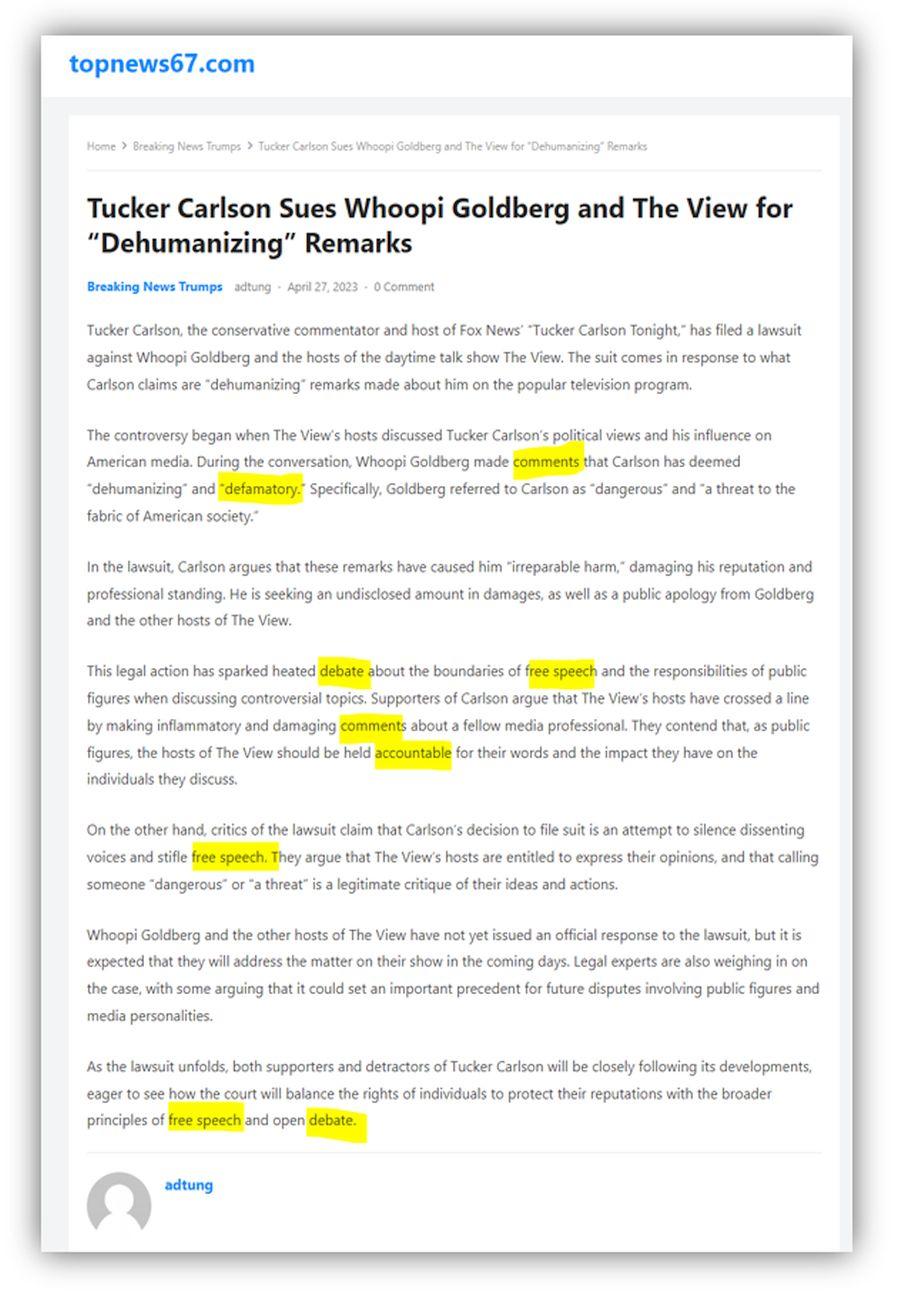

I spent some time searching for stories mentioning "Goldberg," "Tucker" and "lawsuit" using tools like CrowdTangle, Trendolizer and Google. To my surprise, the first one I found was hosted on a site named topnews67.com (it's been taken down, but it's archived here). It was titled 'Tucker Carlson Sues Whoopi Goldberg and The View for "Dehumanizing" Remarks.' It had none of the hallmarks of a typical Blair story: no disclaimer, no hints, no characters named after friends of Blair (something he does often as an in-joke or homage).

(Source: Screenshot of the topnews67 story as it appears on The Internet Archive on May 8, 2023. Highlights by Lead Stories.)

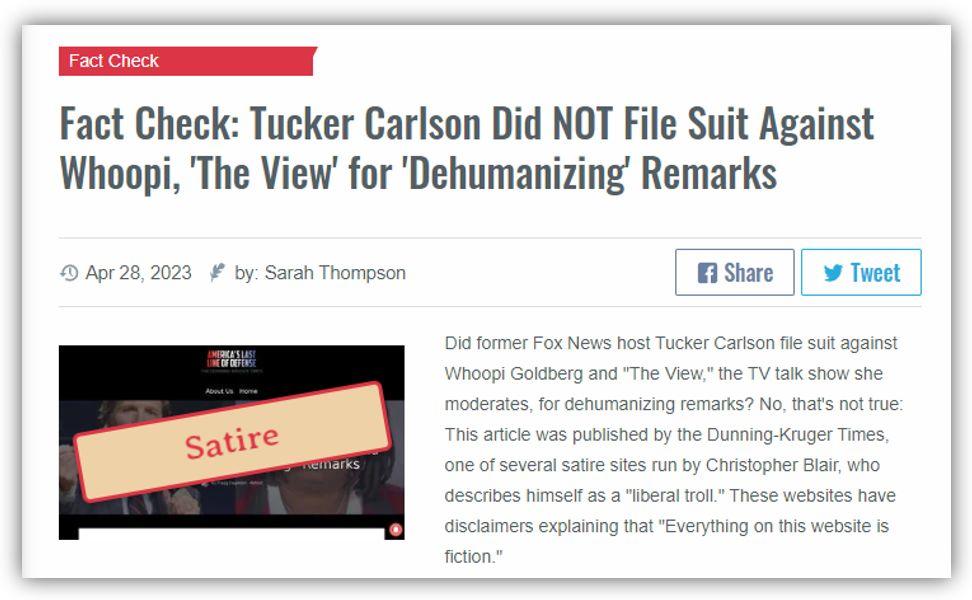

Only a bit later did I find this story on "The Dunning-Kruger Times," one of Blair's sites: 'Tucker Carlson Files Suit Against Whoopi and The View for "Dehumanizing" Remarks' (archived here). That one did have all the trappings of a real Christopher Blair story, including the use of the name "Joe Barron" in the article. The text of the article was completely different, but the headline was nearly identical: "Files Suit Against Whoopi" had simply become "Sues Whoopi Goldberg."
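To pin down exactly how close the two headlines are, a word-level diff helps. The sketch below uses Python's standard `difflib` module on the two headlines quoted above; `headline_diff` is a hypothetical helper, not a tool Lead Stories says it used, and a similarity score like this would only flag candidates for a human to check.

```python
import difflib
from typing import List, Tuple


def headline_diff(original: str, suspect: str) -> Tuple[float, List[str]]:
    """Compare two headlines word by word.

    Returns difflib's similarity ratio (0.0 to 1.0) and a list of the
    word-level edits needed to turn `original` into `suspect`.
    """
    a, b = original.split(), suspect.split()
    matcher = difflib.SequenceMatcher(a=a, b=b)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":  # keep only replace / delete / insert operations
            changes.append(f"{tag}: {' '.join(a[i1:i2])!r} -> {' '.join(b[j1:j2])!r}")
    return matcher.ratio(), changes


blair = 'Tucker Carlson Files Suit Against Whoopi and The View for "Dehumanizing" Remarks'
copycat = 'Tucker Carlson Sues Whoopi Goldberg and The View for "Dehumanizing" Remarks'
ratio, changes = headline_diff(blair, copycat)
print(f"{ratio:.2f}")  # 0.78
for change in changes:
    print(change)
# replace: 'Files Suit Against' -> 'Sues'
# insert: '' -> 'Goldberg'
```

Splitting on whitespace keeps the comparison at the word level, so the reworded verb shows up as a single replacement rather than a string of character edits.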

Once we had found the origin of the story, it was quick work to get a fact check out:

(Source: screenshot of our own fact check taken on May 8, 2023.)

But wait ...

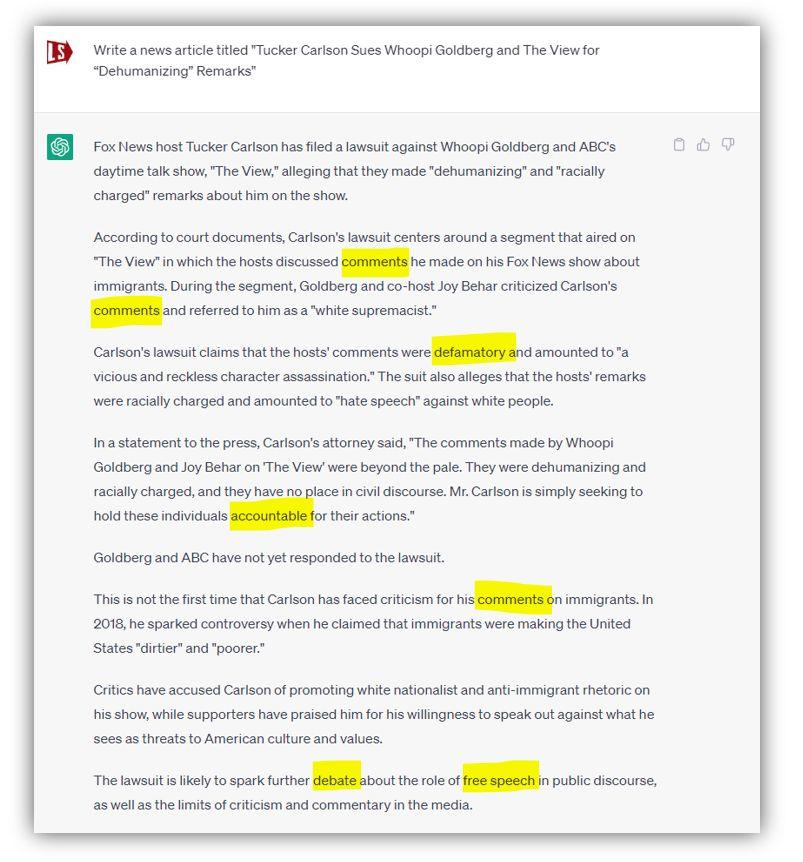

On a hunch, I asked ChatGPT to generate an article based on Blair's headline. The output wasn't the exact same article as the one on topnews67.com, which I expected given the inherent randomness in ChatGPT. But it did share several terms with it, such as "comments," "defamatory," "accountable," "free speech" and "debate." None of these terms appeared in Blair's story. They do sound like the kind of words you'd plausibly expect in a story about a lawsuit between media figures over some remarks, which is exactly what you would expect from a language model designed to come up with plausible-sounding text.

(Source: Screenshot of ChatGPT output taken on May 8, 2023. Highlights by Lead Stories.)
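The comparison described above boils down to a simple check: which terms show up in both the ChatGPT output and the topnews67.com article, but not in Blair's original? Here is a minimal Python sketch of that check. The helper names, the phrase list and the toy sentences are hypothetical and for illustration only (they are not the real articles), and shared vocabulary on its own is a hint worth following up, not proof of AI authorship.

```python
import re
from typing import Iterable, List


def contains_phrase(text: str, phrase: str) -> bool:
    """Case-insensitive whole-word match; multi-word phrases may span any whitespace."""
    words = phrase.lower().split()
    pattern = r"\b" + r"\s+".join(re.escape(w) for w in words) + r"\b"
    return re.search(pattern, text.lower()) is not None


def shared_but_absent(
    phrases: Iterable[str], text_a: str, text_b: str, reference: str
) -> List[str]:
    """Phrases found in both text_a and text_b but missing from reference."""
    return [
        p
        for p in phrases
        if contains_phrase(text_a, p)
        and contains_phrase(text_b, p)
        and not contains_phrase(reference, p)
    ]


# Toy sentences for illustration only; these are NOT the real articles.
chatgpt_text = "The comments were defamatory, and free speech matters."
suspect_text = "He called the comments defamatory and said free   speech is at stake."
original_text = "A satirical piece about comments made on TV."
terms = ["comments", "defamatory", "accountable", "free speech", "debate"]
print(shared_but_absent(terms, chatgpt_text, suspect_text, original_text))
# ['defamatory', 'free speech']
```

Matching on word boundaries rather than raw substrings avoids false hits such as "comments" inside a longer word, and allowing any whitespace between words lets two-word terms like "free speech" match across line breaks.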

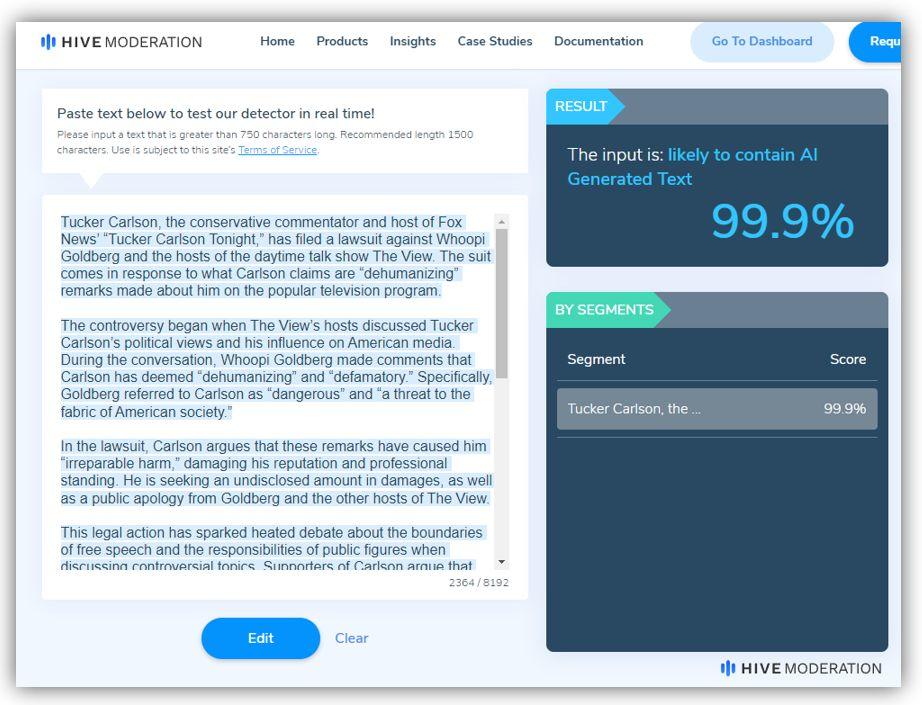

When I ran the text of the topnews67.com article through an online tool from Hive Moderation that detects AI-generated text, it reported being 99.9 percent certain that the text had been generated by AI:

(Source: Screenshot of Hive Moderation output taken on May 8, 2023.)

For reference, when I put Blair's story through the same tool it said it was 0 percent likely to have been AI-generated.

New tactic: plag-AI-rism

Many of our past fact checks of Christopher Blair stories (for example this one) have included this paragraph:

Articles from Blair's sites frequently get copied by 'real' fake news sites that omit the satire disclaimer and other hints the stories are fake. One of the most persistent networks of such sites is run by a man from Pakistan named Kashif Shahzad Khokhar (aka 'DashiKashi') who has spammed hundreds of such stolen stories into conservative and right-wing Facebook pages in order to profit from the ad revenue.

His stories have also been lifted by spammers from Macedonia and other places, and Blair has fought back using copyright takedown requests in the past.

Blair's stories are very popular with a certain type of social media spammer because the headlines are usually very good at enticing angry people to like and share them without much thought (or even without clicking through and reading the article). But outright copying the entire story can be quite risky: The copyright notice on Blair's sites literally reads "Copyright 2023 -- Paid Liberal Trolls of America. Steal our stuff and we'll sue."

The new tactic appears to be to just steal most of the headline and leave the article writing to an AI, avoiding the risk of plagiarism charges. I guess you could call this "plag-AI-rism" then?

Reached via direct message on Facebook, Blair confirmed this had been happening with other stories from his websites too, adding:

There's another guy out there doing the same thing but adding Elon to all the headlines.

What a world!